"Computers in the future may ... weigh no more than 1.5 tons."

– Popular Mechanics, 1949

This is a computer from 1965. When it was first sold, it was as tall as today's average American citizen, cost $28,500 to buy and had a memory of 4,096 words — 8 kilobytes.

Let's say that you are reading this article on an iPhone 6s with 16 gigabytes of memory. That cute little box in your palm packs over 2,000,000 times more information into almost 20,000 times less space and is over 50 times cheaper, not counting inflation. For that screamin' deal, you can thank Moore's Law.
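If you want to check the memory comparison yourself, here is a quick Python sketch. It assumes binary units (1 kilobyte = 1,024 bytes, 1 gigabyte = 1,024³ bytes), which the article does not specify, and the names `OLD_MEMORY_BYTES`, `NEW_MEMORY_BYTES`, and `memory_ratio` are made up for illustration.

```python
# Memory sizes quoted in the article, converted with binary units
# (an assumption; the article does not say which convention it uses).
OLD_MEMORY_BYTES = 8 * 1024        # the 1965 computer: 8 kilobytes
NEW_MEMORY_BYTES = 16 * 1024**3    # the iPhone 6s: 16 gigabytes


def memory_ratio(new_bytes: int, old_bytes: int) -> float:
    """Return how many times larger the new memory is than the old one."""
    return new_bytes / old_bytes


ratio = memory_ratio(NEW_MEMORY_BYTES, OLD_MEMORY_BYTES)
print(f"The phone holds about {ratio:,.0f} times more information.")
```

With these assumptions the ratio comes out to 2,097,152, which matches the "over 2,000,000 times" figure.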

In the same year that you could buy the computer shown above, a man named Gordon Moore, who would later co-found the company Intel, wrote a paper about an interesting trend he had noticed in computing power. While working to create computer chips, he found that the number of parts that could be put onto a single chip was doubling at a steady pace: every year in his original paper, which he revised in 1975 to the now-familiar every two years.
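To see how quickly that kind of doubling adds up, here is a small Python sketch. It assumes a perfectly steady doubling every two years, which is an idealization rather than a measured trend, and the function name `moore_growth_factor` is hypothetical.

```python
def moore_growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Return the multiplier on parts per chip after `years` of steady doubling.

    Assumes an idealized, perfectly regular doubling period.
    """
    if doubling_period_years <= 0:
        raise ValueError("doubling_period_years must be positive")
    return 2.0 ** (years / doubling_period_years)


for span in (10, 20, 50):
    factor = moore_growth_factor(span)
    print(f"After {span} years: about {factor:,.0f} times as many parts per chip")
```

Under this idealized assumption, ten years gives a factor of 32 and fifty years gives a factor of more than 33 million.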

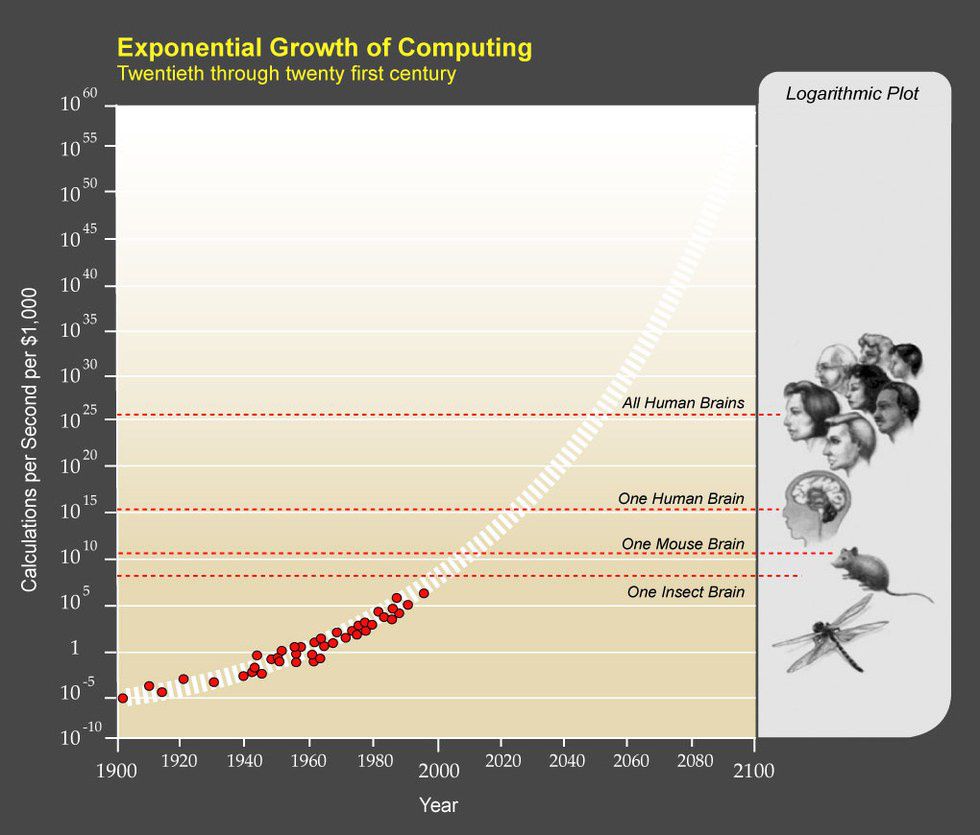

Moore said that there was "no reason to believe it will not remain constant for at least 10 years." However, prominent futurist and real-life mad genius artificial intelligence expert Ray Kurzweil found that Moore's Law holds up even if you extend it to cover the entire 20th century. Just for fun, or more likely as a basis for his book, Kurzweil went even further and decided to extrapolate Moore's Law another full century into the future. He then compared the processing power of computers with that of brains and plopped all of it into this neat little chart:

Kurzweil became a leading voice in predicting the "technological singularity," a hypothetical point in the future where an artificial intelligence starts improving itself at such a fast rate that it surpasses all human intelligence. It can be kind of scary to imagine a computer becoming that powerful, even though researchers are hard at work making sure it would not go crazy as artificial intelligences do so often in the movies. At the point where the computer's self-improvement would really take off, all of our old rules about the world and human society may no longer apply. The benefits something like that could offer, though, cannot be overstated. No disease would be incurable, no problem insurmountable for us. After the singularity, technology would be integrated into biology, opening up whole new worlds of possibilities for how we can live while challenging the definition of humanity. Kurzweil himself describes some of the other benefits of the singularity:

Intelligent nanorobots will be deeply integrated in our bodies, our brains, and our environment, overcoming pollution and poverty, providing vastly extended longevity, full-immersion virtual reality incorporating all of the senses (like “The Matrix”), “experience beaming” (like “Being John Malkovich”), and vastly enhanced human intelligence. The result will be an intimate merger between the technology-creating species and the technological evolutionary process it spawned.

Ray Kurzweil thinks that this will happen in less than 20 years.

One major hitch in Kurzweil's prediction, though, is that Moore's Law appears to be grinding to a halt. For many years, prophets of doom have loudly proclaimed that Moore's Law is finally at its end. As the vice-president of Microsoft Research joked, "[t]here’s a law about Moore’s law ... The number of people predicting the death of Moore’s law doubles every two years." But this time the naysayers are right. Intel, the company which has enforced Moore's Law for the last 50 years, is slowing down its chip development because quantum mechanics is getting in its way.

As I described a few weeks ago, the smaller something is, the less certain its location is, and the more likely it is to "teleport" over any barrier it encounters in a phenomenon known as "quantum tunneling." As Intel has made its computer chips smaller and smaller, there is an increasing chance that electrical particles in those chips will hop right across any barriers that Intel makes.

So, is that it? Is humanity forever doomed to buy iPhones with the same speed and memory for the same price? Not so fast. Researchers have come up with several different workarounds to maintain our enjoyable speed of technological progress.

Quantum physics may have killed Moore's Law, but it also provides a potential way to bring it back to life: quantum computing. Classical computers use classical bits, which are pieces of information that can be either 0 or 1. Quantum computers use qubits (quantum bits), which can be 1 and 0 at the same time since they have a certain probability of becoming 1 or 0 once they are measured. Each qubit represents these two probabilities: two numbers instead of one. To describe two classical bits, you need two numbers, but to describe two qubits, you need four numbers, and so on, with the count doubling for every qubit you add. If you have 300 qubits, then you need more numbers to describe them than there are particles in the known universe!
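To get a feel for how fast that count grows, here is a rough Python sketch. It assumes the usual rule of thumb that n qubits take 2^n numbers to describe, and it uses about 10^80 as the particle count for the known universe, a commonly quoted ballpark that does not come from this article. The names `numbers_to_describe` and `PARTICLES_IN_UNIVERSE_ESTIMATE` are made up for illustration.

```python
# Rough ballpark, NOT from the article: about 10**80 particles in the known universe.
PARTICLES_IN_UNIVERSE_ESTIMATE = 10**80


def numbers_to_describe(qubits: int) -> int:
    """Numbers needed to describe `qubits` qubits, assuming the count doubles per qubit."""
    if qubits < 0:
        raise ValueError("qubits must be non-negative")
    return 2**qubits


for n in (1, 2, 10, 300):
    count = numbers_to_describe(n)
    beats_universe = count > PARTICLES_IN_UNIVERSE_ESTIMATE
    print(f"{n} qubits -> more numbers than particles in the universe? {beats_universe}")
```

Python integers have no fixed size limit, so the 300-qubit case is computed exactly and compared directly against the estimate.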

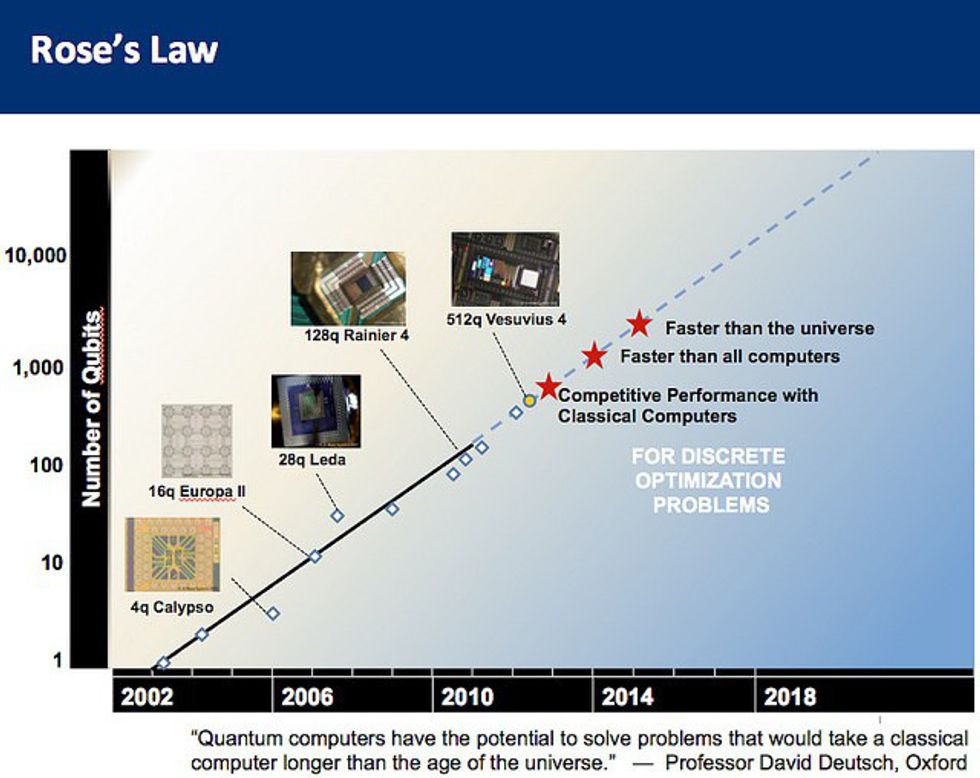

Pretty soon, the exponential improvement of quantum computing will be a huge game-changer. Quantum computers have existed since 1998 and have been developing ever since. Moore's Law may be dead, but Rose's Law has risen in its place, named after the prediction by Geordie Rose from the company D-Wave that "every two years, it will quadruple the number of qubits ... its computers run on, 'more or less like clockwork.'" While the predictions about quantum computers' performance (shown as red stars) are apparently lagging by a few years, the predictions about the number of qubits (shown as blue diamonds) are keeping up with Rose's Law quite well:

Can quantum computing save Moore's Law by completely replacing it with Rose's Law? Well ... not quite. There is a pretty big catch. As soon as a qubit is measured, it needs to make up its mind about being 1 or 0, so both of its probability values disappear. Qubits can be used for certain types of calculations but not for storing information. They exponentially reduce the number of steps needed for certain very specific types of calculations, but they cannot speed up calculations in general:

Think of it as an application-specific processor, tuned to perform one task — solving discrete optimization problems. This happens to map to many real world applications, from finance to molecular modeling to machine learning, but it is not going to change our current personal computing tasks. In the near term, assume it will apply to scientific supercomputing tasks and commercial optimization tasks where a heuristic may suffice today, and perhaps it will be lurking in the shadows of an Internet giant’s data center improving image recognition and other forms of near-AI magic.

Fortunately, quantum computing is not the only available solution. Other suggestions include making chips out of better materials than silicon (including DNA!), using algorithms based on the brain which improve themselves, and using crazy physics tricks like liquid light, stopping light, and "vortex lasers" which bend light. All of these possibilities will probably be pursued in the near future. Moore's Law may die, but technology marches on.

For more information on quantum computing, check out some of these Futurism articles:

Google’s Quantum Computer May be Superior to Conventional Computers by 2018

Qubit Solution Brings Us Closer to a New Era in Computing

The First Reprogrammable Quantum Computer Has Been Created

The Future of Quantum Computing: Electron Holes