Anyone who’s ever seen the “Terminator” series knows of Skynet, the ruthless artificial intelligence (AI) system at the heart of the story. This program was entrusted with the safety of the United States and given command over all computerized military equipment, including nuclear missiles. It eventually concluded that humanity would try to shut it down, and it responded by unleashing nuclear Armageddon on the entire human race. Although this makes for great movies, computer scientists remain divided on what influence a real AI would have on the world.

Usually, the debate centers on the idea of instilling values in the program as you would in a child. Essentially, you would raise the newborn AI with certain ground rules for its existence, such as not harming humans or destroying the Earth’s natural environment (a highly ironic rule for humans to impose). But several theorists have argued that as the AI matured, it would override these values in the name of efficiency. Nick Bostrom, director of the Future of Humanity Institute at Oxford University, offers one striking illustration of this obsession with efficiency in an interview with Salon.

“How could an AI make sure that there would be as many paper clips as possible?” asks Bostrom. “One thing it would do is make sure that humans didn’t switch it off, because then there would be fewer paper clips. So it might get rid of humans right away, because they could pose a threat. Also, you would want as many resources as possible, because they could be used to make paper clips. Like, for example, the atoms in human bodies.”

Some of the world’s most prominent minds have reinforced this image of super-intelligent machines looming over humanity. Elon Musk, CEO and CTO of SpaceX, has compared AI research to “summoning the demon.” Bill Gates, co-founder of Microsoft, said he is “in the camp that is concerned about super intelligence.” Professor Stephen Hawking framed his concerns in evolutionary terms:

“The primitive forms of artificial intelligence we already have, have proved very useful. But I think the development of full artificial intelligence could spell the end of the human race. Once humans develop artificial intelligence it would take off on its own and redesign itself at an ever-increasing rate. Humans, who are limited by slow biological evolution, couldn’t compete and would be superseded.”

The good news is that current technologies suggest we are considerably further from this kind of self-improving artificial intelligence than most people believe. Technologies like Siri are far from our image of a learning machine. If you ask her a question that’s not in her repertoire, she won’t understand you or be able to figure out what you mean. A technology like Apple’s voice-activated assistant is programmed to understand only a specific set of inputs and to give a specific set of outputs.
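To make the idea of a fixed repertoire concrete, here is a toy sketch in Python. It is a hypothetical illustration of the behavior described above, not how Siri is actually built: the phrases, the responses, and the `respond` function are all invented for the example. The program can only answer questions it already has an entry for, after some light cleanup of capitalization and punctuation.

```python
# A toy "assistant" with a fixed repertoire: it maps a handful of known
# phrases to canned responses and has no way to generalize beyond them.
# Hypothetical illustration only; this is not how Apple's Siri is implemented.

RESPONSES = {
    "what time is it": "Checking the clock...",
    "what's the weather": "Fetching the forecast...",
    "set an alarm": "Alarm set.",
}

FALLBACK = "Sorry, I didn't understand that."


def respond(query: str) -> str:
    """Return a canned answer for a known phrase, else the fallback."""
    # Normalize only surrounding whitespace, letter case, and a trailing "?".
    key = query.strip().lower().rstrip("?")
    # Any phrasing not stored verbatim in the table falls through.
    return RESPONSES.get(key, FALLBACK)


if __name__ == "__main__":
    print(respond("What time is it?"))        # Checking the clock...
    print(respond("Do I need an umbrella?"))  # Sorry, I didn't understand that.
```

Asking “Do I need an umbrella?” is really a question about the weather, but because that exact phrase isn’t in the table, the toy assistant gives up. Handling it would require the program to work out what the question means, which is exactly what a learning machine is supposed to do.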

The defining feature of true artificial intelligence is that it learns the meanings of the things around it. Today’s cutting-edge software reproduces certain aspects of being human, but nothing near the capabilities of Skynet or HAL exists just yet.

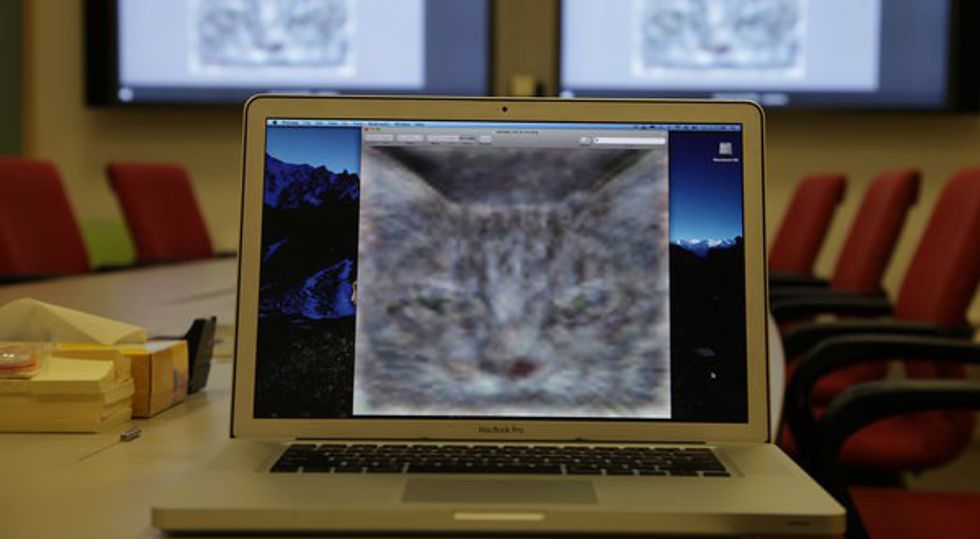

Another infant technology in the field of AI that shows great promise is “deep learning.” These algorithms analyze massive amounts of data and extract patterns from it. One example of deep learning comes from Google’s X lab, where researchers built a neural network (loosely, a partial simulation of a brain) and fed it images taken from YouTube videos. Without being told what the images contained, the network learned to recognize cats. The image above shows what it thought a cat looked like.

However, it took 16,000 computer processors and 10 million images to produce even this rudimentary understanding of what a cat looks like. Although this is a remarkable achievement, it reproduces only a limited, subhuman form of perception. The network gains no contextual meaning from an image, only the visual appearance of an animal. If a task this simple for children is so complex for computers, that should tell you something about how difficult it is to create intelligent machines.

So fear not: Skynet isn’t coming for you. At least, not yet.
