The integration of artificial intelligence into our lives is imminent. Actually, “imminent” undersells it, considering how much AI already operates in the background of our lives. From financial trading to medical diagnoses to Bluetooth speakers, whether we like it or not, and whether we are even aware of it or not, the dawn of everyday artificial intelligence has already broken. At this point, it’s just a matter of when the next unprecedented piece of software reaches our fingertips and how quickly we are willing to embrace it.

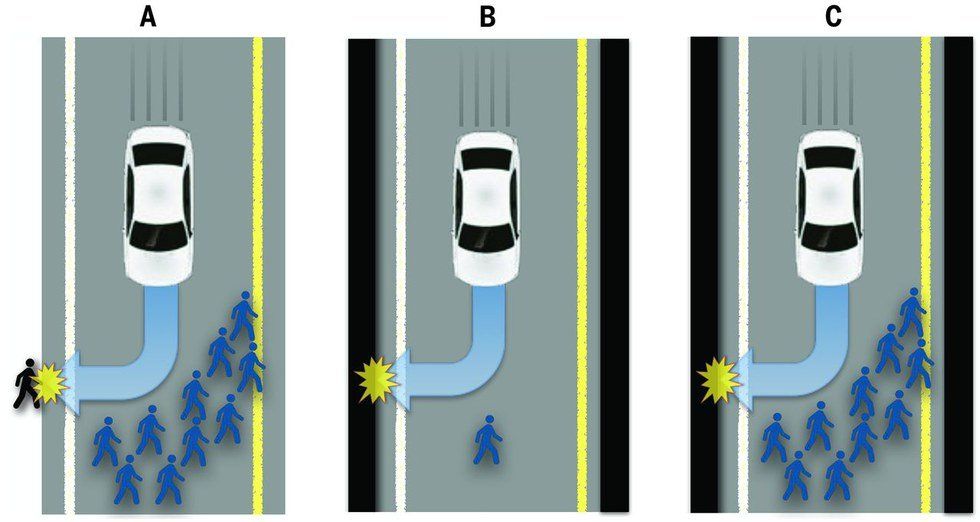

When it comes to financial trading and speakers, the novelty, let alone the efficiency, is usually enough to sell us on the next AI system. But what about when this technology is running something potentially dangerous, like a vehicle? Will we be quick to adopt it, or trepidatious? Well, as I said earlier, it’s already here. Self-driving cars are on the road. Grade four trains (trains that operate entirely without human staff) are running on tracks in the United States, across the globe, and even right down the road at O'Hare International Airport in Chicago.**** How about airplanes? Tim Robinson, the editor-in-chief of the Royal Aeronautical Society’s Aerospace magazine, said in a BBC article this week, “So with pilots relying on autopilots for 95% of today’s flights, the argument goes, why not make the other 5%, takeoff and landing, automated?”** That said, how many of us would voluntarily fly in a completely pilot-free airplane? Or even ride in a driverless car? A survey this year from AAA found that three in four Americans are “afraid” of riding in self-driving cars.*** We say that here and now, but how long before it becomes commonplace? Once we trust that it’s safer, more efficient, or simply too convenient to resist?

Earlier this summer, the weekly international journal Science published an article that used data and figures to pose a very different kind of dilemma in AI: a “social dilemma” in the driverless car.* The article raises the question: given imminent, unavoidable harm, should the AI operating a driverless car be programmed to always protect its passenger, or to protect as many lives as possible? It lays out several scenarios. Suppose harm is imminent and unavoidable as the car approaches an intersection, and the car can either hit a pedestrian or swerve and crash itself. Which decision should it make?
Should it always protect the driver, even though saving the pedestrian’s life might be the nobler choice? What if the car is headed for a crowd of pedestrians, maybe with some doctors and moms among them? Should it still protect the driver, or should it try to save as many lives as possible? Can we even apply our usual ethics here, or does this brand-new set of circumstances call for an entirely different conversation? The article describes many other scenarios that are sure to stir up conversation. These questions may seem hypothetical, and they are, but they are pertinent. When we discuss these technological achievements, and the ones on the horizon, real-world applications raise ethical questions as well as logistical ones. We need not only to consider those questions but also to ask whether we can even frame them in human ethical terms, or whether the answers are grayer still. These are questions we need to think about today. Hey, maybe we should even ask Siri?

http://science.sciencemag.org/content/352/6293/157... (*)

http://www.bbc.com/future/story/20160912-would-you... (**)
