My body, my rules. It's as simple as that. I would like to get this message across loud and clear for all to hear. What I choose to put on my body, whether it's clothing or makeup or ink, is my choice, my business and not anyone else's.

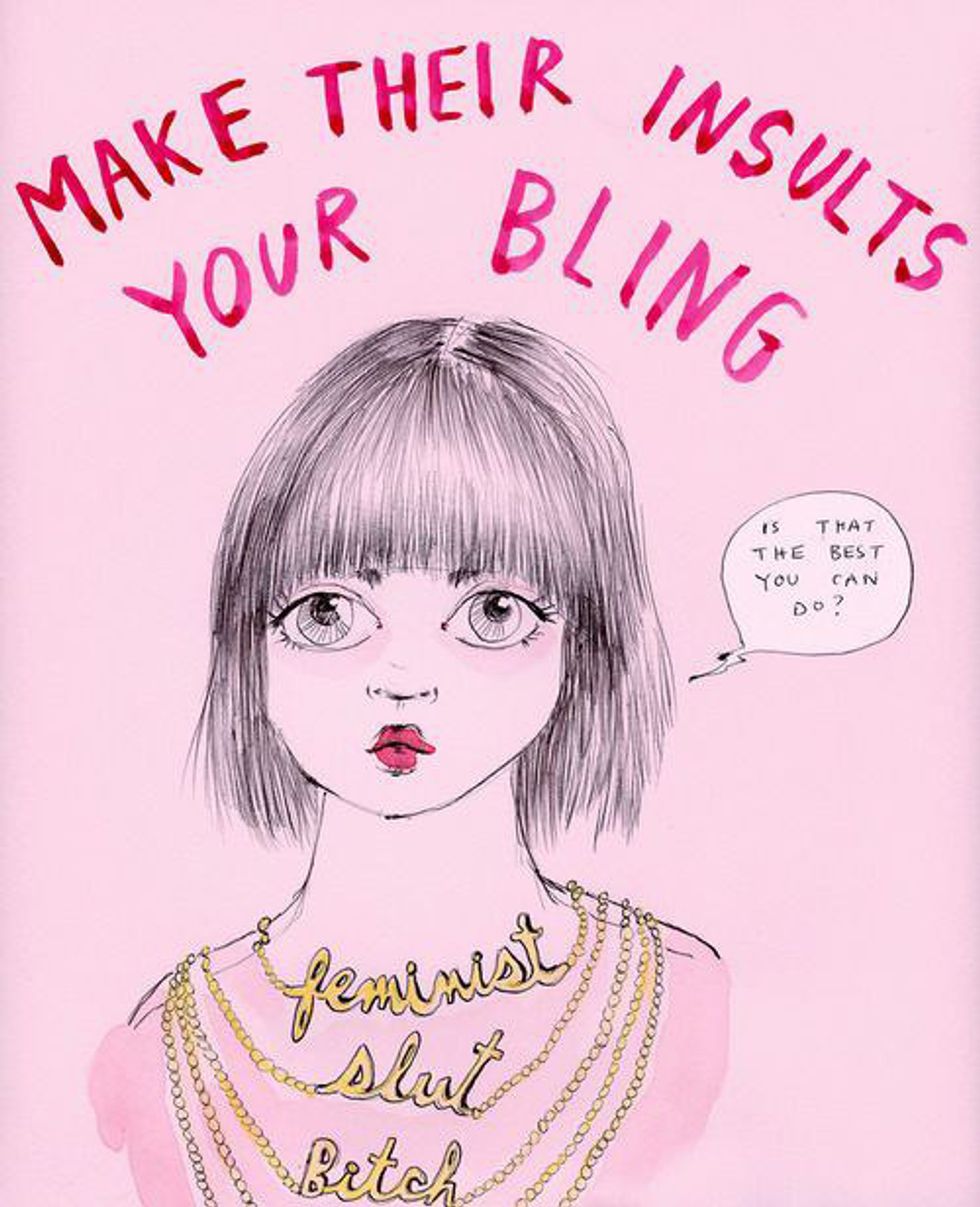

We all have to learn to live with others' negativity. People are going to be rude. That's inevitable. But here's what I'm really trying to get at: women's bodies are policed daily. It's not enough to claim "sticks and stones" any longer. I'm talking about acknowledging and deconstructing the double standard. I'm talking about holding ourselves accountable for the internalized misogyny that we all, regardless of gender identity, have inside.

I think back to being in fifth grade, reading those terrible magazines marketed to me for the sole purpose of making me feel like crap about how I looked. I think about how there were guides on how to dress your body depending on its shape to look the most flattering. Here's an idea: wear whatever the hell you want no matter what your body looks like.

I think back to the years I spent dreading school dances and beach outings with friends because I felt the pressure to wear what everyone else was wearing but knew it would make me feel uncomfortable. I think back to days spent shopping with friends who threw dresses at me to try on that were clearly ten sizes too small, and to feeling humiliated when they wouldn't fit. I think back to all of the seconds, minutes, hours that I've spent in front of a mirror looking at every bump and lump and roll...

I think back to the journal entries middle school me wrote about truly believing that everywhere I went the world was looking down on my body with vicious eyes. I think back to checking the scale every couple of hours praying for a change. I think back to the cycles and cycles of binge eating and then sobbing and saying awful things to myself to absorb the shame and the calories.

This is more than just a warped image of my body. This is about feeling a lack of control, a lack of self-ownership. Women are taught from birth that their bodies aren't their own. Start with the mundane things like dresses and bathing suits and makeup and move up to bigger things like incredibly limited access to safe abortions and Planned Parenthood shootings and the way society prioritizes the lives of rapists over their victims.

It shouldn't have taken me until the end of my first year of college to realize that my body is my own and I will wear a bikini to the beach and get a cute tattoo and wear blood red lipstick if I so damn well choose to. It shouldn't have taken me until the end of my first year of college to understand that I am in charge of myself. It shouldn't have taken me until the end of my first year of college to find the root of my fear of intimacy. Let's get one thing straight from all of this: women are the empresses of their own bodies, and what women choose to do or not do is no one else's judgment call but their own.