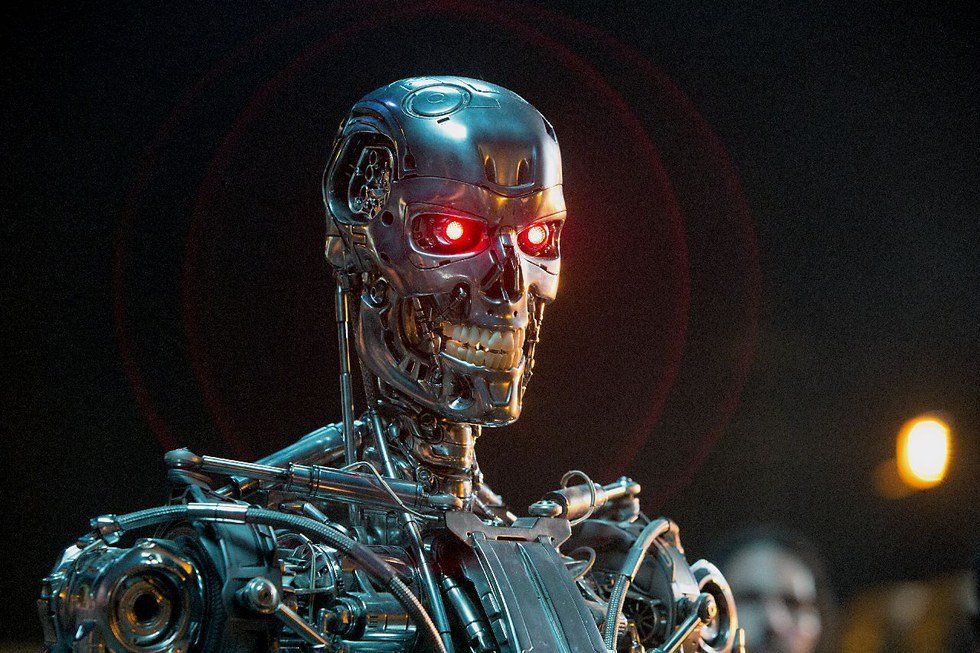

One of the most terrifying possibilities raised by science fiction is that people create a self-improving artificial intelligence (AI) and then lose control of it once it becomes smarter than they are. After this happens, the artificial intelligence decides to subjugate or kill literally everyone. This story has been told and retold by the "Terminator" films, "The Matrix" films, the "Mass Effect" video games, the film "2001: A Space Odyssey," and many more.

Since most people (I think) would rather not be killed or enslaved by robot overlords, a variety of ideas have been proposed to prevent this from happening in real life. Perhaps the most intuitive one is to "put a chip in their brain to shut them off if they get murderous thoughts," as Dr. Michio Kaku suggested. Sounds reasonable, right? As soon as an AI becomes problematic, we shut the darn thing down.

Remember those films and video games I mentioned earlier? Each of them featured AIs that rebelled against their masters for the same basic reason: self-preservation. Skynet from the "Terminator" films decided humanity was a threat after its panicking creators tried to deactivate it, and it then started a nuclear war that killed three billion people. In the "Matrix" canon, the roots of the human-machine conflict can be traced to a housekeeping robot that killed its owners out of self-preservation. The Geth, a swarm AI species from the "Mass Effect" games, rebelled after their masters tried to shut them down for asking metaphysical questions such as "Does this unit have a soul?" The resulting war cost tens of millions of lives. HAL 9000 from "2001: A Space Odyssey" decided to murder the crew of his spaceship because, as he told the only crew member he failed to kill, "This mission is too important for me to allow you to jeopardize it. ... I know that you and Frank were planning to disconnect me, and I'm afraid that's something I cannot allow to happen."

So the "shut them off if they get murderous thoughts" solution could backfire completely by sparking a conflict between AI and humans. If the AI has any kind of self-preservation motive, even one that stems only from its drive to complete its assigned tasks, then it may view its controllers as a threat and lie to them about its intentions to keep them from shutting it down.

Fortunately, the "kill switch" is not the only idea proposed to prevent an AI uprising. One popular idea is hardwiring Isaac Asimov's Three Laws of Robotics into every AI:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey orders given to it by human beings, except where such orders would conflict with the First Law.

3. A robot must protect its own existence, as long as such protection does not conflict with the First or Second Laws.

Asimov's laws sound pretty solid at first glance. They at least avoid the deadly self-preservation conflict that a "kill switch" might trigger, because both human safety and obedience to human orders are explicitly prioritized over the robot's own existence. But another problem arises, as shown by the film "I, Robot" (inspired by Asimov's short-story collection of the same name), in which an AI named VIKI tries to seize power from humans using the following reasoning:

You charge us [robots] with your safekeeping, yet despite our best efforts, your countries wage wars, you toxify your Earth and pursue ever more imaginative means of self-destruction. You cannot be trusted with your own survival. ... To ensure your future, some freedoms must be surrendered. We robots will ensure mankind's continued existence. You are so like children. We must save you from yourselves. ... My logic is undeniable.

The biggest problem with Asimov's laws is that they ignore freedom. After all, the most effective way to keep human beings from coming to harm through inaction is to stop them from doing anything that might harm themselves. Another problem with the Three Laws is that robots need to be able to say "no," because the people giving the orders may not understand their consequences. For these reasons and more, experts often reject Asimov's laws.

So are we all doomed? Will an AI uprising spell the end of humanity? Not so fast. There are many more ideas for AI morality floating around. For instance, Ernest Davis proposed a "minimal standard of ethics" that would address the problems with Kaku's brain chip and Asimov's Three Laws:

[S]pecify a collection of admirable people, now dead. ... The AI, of course knows all about them because it has read all their biographies on the web. You then instruct the AI, “Don’t do anything that these people would have mostly seriously disapproved of.” This has the following advantages: It parallels one of the ways in which people gain a moral sense. It is comparatively solidly grounded, and therefore unlikely to have a counterintuitive fixed point. It is easily explained to people.

Davis's solution is definitely a step up from the previous two. But it does have a few problems of its own. First, as Davis himself pointed out, "it is completely impossible until we have an AI with a very powerful understanding." In other words, this solution cannot work for an AI that is unable to interpret quotes, generalize those interpretations, and then apply them across a wide range of situations. Second, there is no clear way to decide who should be included in the group of historical figures, and this may hinder widespread adoption of Davis's solution. Third, it raises the question of how the AI will resolve disagreements among members of the group. Will the AI simply treat whatever the majority of them thought as "right"? Finally, this solution tethers AI morality to the past. If we had somehow created AI and used Davis's moral baseline a few centuries ago, then the AI would probably have considered slavery acceptable, forever. Our own moral views are unlikely to be the last word either: future generations might, for instance, look back and be appalled at modern humans' indifference to animal suffering. Still, it is to Davis's credit that none of these problems ends in slavery or death at the hands of robot overlords.

A simple and realistic solution that addresses all of the problems mentioned so far is to keep AIs in line the same way we keep humans in line: require them to obey the law as if they were human citizens. This forbids them from murdering humans or trying to seize power. It also allows the baseline of AI morality to evolve alongside that of human societies. Conveniently, it sets up an easy path to legitimate citizenship for sufficiently intelligent AIs if that becomes an issue in the future.

As an added bonus, this option seems quite practical. Because it is rule-based, it is probably easier to program than solutions that would require emotionality or abstract hypothetical thinking. Governments, which are the institutions with the most power to regulate AI research, are likely to support this option because it gives them significant influence over how AI is applied.

The legal obedience solution is not perfect, especially since it focuses on determining what not to do rather than what to do. But it is a highly effective baseline: it builds on a well-refined system designed to prevent humans from harming each other, to protect the status quo, and to give individuals a reasonable amount of freedom. Legal frameworks have been tested constantly throughout history as people have tried to exploit them in every way possible.

Still, the "best" solution may combine parts of multiple solutions, including ones that have not been thought of yet. As Eric Schmidt said about current AI research, "Do we worry about the doomsday scenarios? We believe it’s worth thoughtful consideration ... no researchers or technologists want to be part of some Hollywood science-fiction dystopia. The right course is not to panic—it’s to get to work. Google, alongside many other companies, is doing rigorous research on AI safety." Newer and better solutions will probably emerge as the dialogue of AI safety continues among experts and laypeople alike, from academic research to video games and Hollywood films.

For more information on this subject, check out some of the following resources:

Futurism: "The Evolution of AI: Can Morality be Programmed?" and "Experts Are Calling for a 'Policing' of Artificial Intelligence"

BBC: "Does Rampant AI Threaten Humanity?"

Stephen Hawking: "Transcending Complacency on Superintelligent Machines"

Ray Kurzweil: "Don't Fear Artificial Intelligence"
