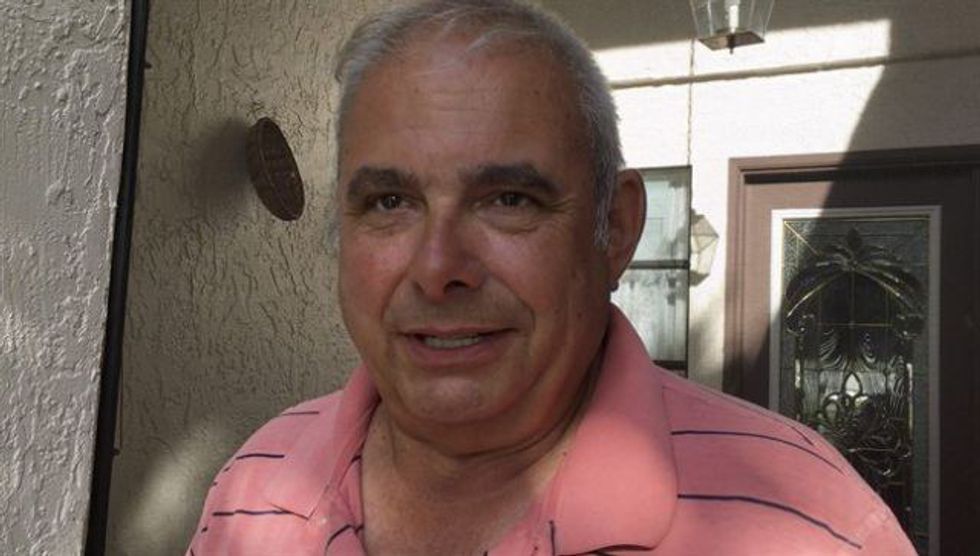

Drinking, texting, and falling asleep at the wheel are just some of the roadway dangers that self-driving cars promise to solve, but the cars themselves are not infallible. On May 7, 2016, Joshua D. Brown was killed when his self-driving car failed to distinguish a white tractor trailer from a brightly lit sky. This has sparked a conversation that I never imagined we would be having: how do you design a car to have morals? Suppose there is a crowd of people to your left and to your right, and someone runs in front of your car. What does the car do? If it swerves either way, people will surely die, but it is too late to brake and stop in time. Your self-driving vehicle now has an important decision to make.

Sophie's Choice in 2016

The "who to kill" moral dilemma is nothing new, although we have never had to face it with regard to autonomous machines. One major example that comes to mind is William Styron's Sophie's Choice (1979). In the story, Sophie and her two children are prisoners in a Nazi concentration camp. An SS doctor tells her that she has to choose which one of her children will live and which one will die; if she chooses neither, both will die. Obviously this is not an easy choice for Sophie, but the fact that both children will die if she refuses gives her a moral incentive to select one of them. The situation we face today is essentially the same: no one wants a car to kill someone, but when a collision cannot be avoided, self-driving cars will need morally grounded decision-making skills to handle it as well as possible.

What About the Passengers?

One major source of debate is what qualifies as a reasonable code of ethics for the car to possess. Should the car be programmed to minimize the loss of life, even if it means possibly killing its passengers, or should the passengers be protected at all costs? It may seem like a silly question, since minimizing the loss of life looks like the obvious answer from a moral standpoint. But ordinary cars are involved in so many accidents that if fewer people buy autonomous cars because of how they are programmed, the result may be more deaths overall. Jean-Francois Bonnefon and his colleagues at the Toulouse School of Economics have conducted studies to gauge public opinion on the matter. After presenting various scenarios to several hundred users of Amazon's Mechanical Turk platform, they found that the general opinion is that limiting the loss of life should take precedence, even if it means killing the driver. There is a caveat to their findings, though, as stated by Bonnefon:

“[Participants] were not as confident that autonomous vehicles would be programmed that way in reality—and for a good reason: they actually wished others to cruise in utilitarian autonomous vehicles, more than they wanted to buy utilitarian autonomous vehicles themselves.”

How Do We Assign Blame for Accidents?

Bonnefon and his colleagues raise another set of interesting questions about self-driving cars:

“Is it acceptable for an autonomous vehicle to avoid a motorcycle by swerving into a wall, considering that the probability of survival is greater for the passenger of the car, than for the rider of the motorcycle? Should different decisions be made when children are on board, since they both have a longer time ahead of them than adults, and had less agency in being in the car in the first place? If a manufacturer offers different versions of its moral algorithm, and a buyer knowingly chose one of them, is the buyer to blame for the harmful consequences of the algorithm’s decisions?”

These are fair questions. There is no evidence that all autonomous car manufacturers will be required to use the same moral algorithm, so does this mean that producers of autonomous cars are legally liable for the accidents their cars cause? Experts say yes; in fact, some companies, like Volvo, have already declared that they will pay for any injuries or property damage caused by their upcoming self-driving cars (Volvo's is set to debut in 2020). For now, though, pretty much all autonomous and semi-autonomous cars require the user to stay alert, so the blame likely doesn't fall solely on the manufacturer. For example, General Motors' upcoming "Super Cruise" technology comes with the caveat that humans must remain alert while it is in use, in case something interferes with the vehicle's autonomous driving mechanisms.

Final Thoughts

Technology is becoming progressively more complex and, consequently, more lifelike. The idea that a car could drive us around, or possess morals, may have seemed like science fiction years ago, but we stand at the cusp of a major revolution in automobile technology. There have been accidents involving autonomous vehicles, and the system is not perfect just yet. At the end of the day, though, these cars only have to drive better than we do, and that isn't very hard.