I could show you the numbers, and they are shocking. But sometimes statistics don't drive the message home. Study after study after study shows that diversity leads to immense improvements, and for a business, that translates to more money. But again, sometimes studies don't drive the message home either.

So instead, here are some anecdotes of ridiculous incidents that a more diverse team could easily have prevented:

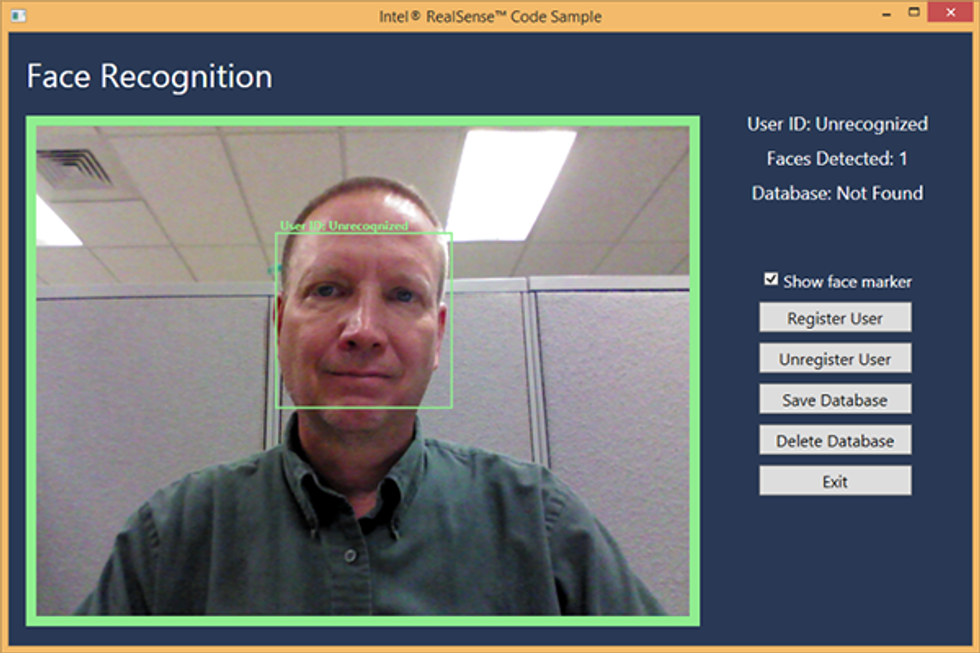

1. Facial recognition software apparently doesn't recognize black faces.

Slack engineer Erica Baker says, "Every time a manufacturer releases a facial-recognition feature in a camera, almost always it can’t recognize black people." Why is this? Because engineers tend to test these features on themselves, and almost all of them are white. If there had been even one black person on any of those teams, they could have tried it on themselves and realized, whoops, there's a problem. But no. Now that the technology is on the market, companies are discovering these glitches and fixing them, but it would have been so easy to avoid the problems in the first place.

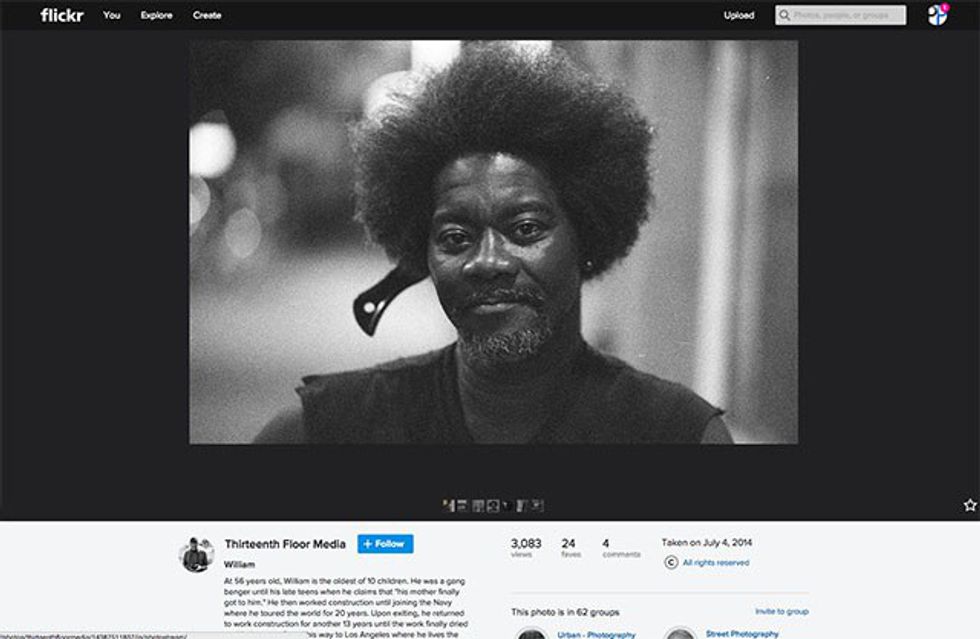

2. Black men = ape?

Yikes! This particular one is from Flickr, but other sites have had similar problems with software making serious auto-tagging errors, such as tagging black men as apes. What makes it worse is that this isn't essential technology. They could easily have taken the time to assemble a diverse team, find a diverse group to test it on, and so on, and discovered the problem before releasing the feature. But in the race to get as many cool features out as fast as humanly possible, a little thing called "not being racist" isn't deemed important enough to consider.

3. Maybe ad targeting should actually target the real consumers, not outdated stereotypes.

A Carnegie Mellon experiment last year showed that Google's ad targeting displayed ads for high-paying jobs (defined as paying more than $200,000) significantly more often to men than to women (roughly 1,800 times to men versus 300 times to women). Other studies of other companies have found similar results, with men targeted more often for better-paying jobs or for jobs traditionally considered male, such as engineering. If a company advertising through Google, Facebook, or elsewhere is unbiased in its hiring practices, then it probably also wants its ads to reach the best candidates, not the people stereotyped as being better.

Also, while targeting ads is certainly legal, gender discrimination in hiring is not. That makes this a legally fuzzy area, but why build algorithms that behave in sexist ways in the first place? No one benefits from that.

4. Having a uterus is still quite problematic.

OK, seriously, people? Here's a newsflash for you: about half the world's population has menstrual cycles! With a number like that, you would think periods would be somewhere in the back of every team's mind. But they're not. Every so often I see something that just makes me shake my head, such as this latest one from space exploration, where you would think people would be smart enough to think things through. The system for reclaiming water from urine "wasn’t designed with the possibility in mind that there would be menstrual blood in the mix." Oops. Something that lasts three to five days, roughly once a month, for about half the population? That's probably a concept they should have thought about.

At this point it's rather difficult to go through the entire design process again, so they're trying the easier option of studying the effects of menstrual suppression (using birth control methods to skip periods entirely) in space. But what if we had avoided all of this nonsense and just thought about periods in the first place? We probably would have saved a lot of time, money, and effort.

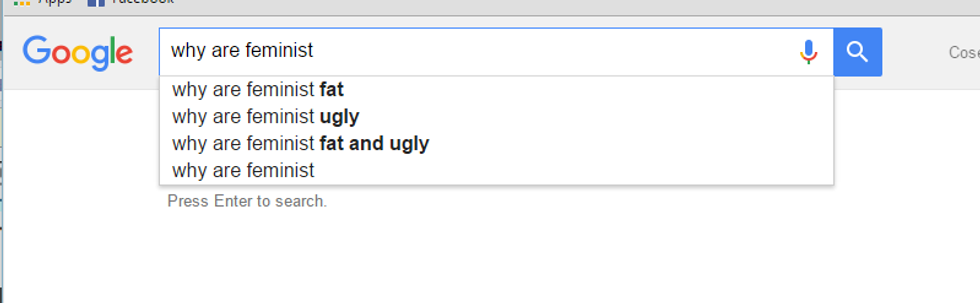

5. Companies don't really think through how software learns.

It's really rather amazing how intelligent software can be. We now have algorithms that learn as you use them. But this overlooks how awful people can be. Do we ever really need an autocomplete like the one above? And I chose that as one of the more innocent ones; there are some pretty crappy autocompletes out there that I don't recommend looking for. Chatbots learn from humans too, as Microsoft's disastrous "Tay" showed. While I think the idea of software that learns as it goes is great, we really need some oversight, not just overeager engineers pushing their products out too early.

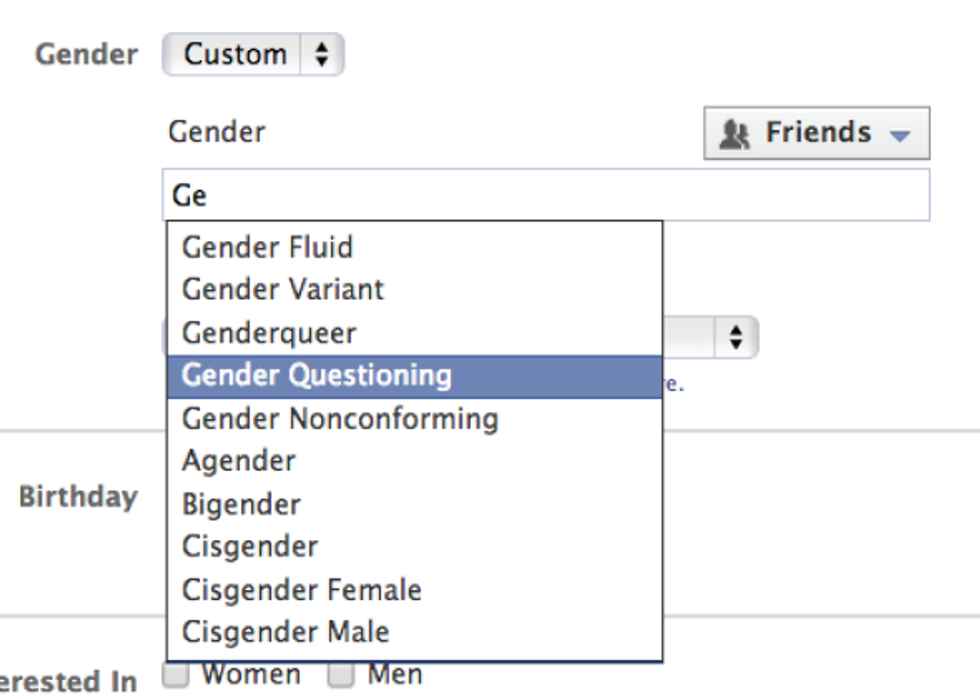

6. Unusual? Disqualified!

Drop-down menus are great when companies need to sort data quickly. But they are also limiting. The LGBT community is gaining traction, particularly around preferred gender pronouns. Facebook, for example, has expanded its options for relationship types and gender. It has still had some issues with its "real name" policy, which tended to block transgender people, but it is trying to improve there too.

But there are many more nooks and crannies beyond obvious ones like transgender issues. Veterans, for example, have been disqualified for jobs because the software employers used didn’t recognize military-related skills. That's a great thank-you for all their service. I'm not saying to stop using drop-down menus, but they really need to be thought through beyond what people similar to the engineers would need.

These examples are nowhere near an exhaustive list, just a few incidents I've seen recently. There are probably countless more happening every single day, negatively affecting people's lives and, really, society in general.

So why the heck can't an all-white or all-male team figure this out themselves? That's a bit harder to pin down. Part of it is simply not thinking. With a "color-blind" or "I just see people" sort of attitude, we don't account for the truth: people do differ. Instead, we end up with things that work for the majority in power. Another reason is social bias. While some people are clearly racist or misogynistic, the bigger issue is unconscious bias. We have no clue we're making assumptions, or that a "random" choice isn't so random. On a more diverse team, there's a better chance that someone will say, "Hey, wait a moment," and catch a problem before it becomes public.