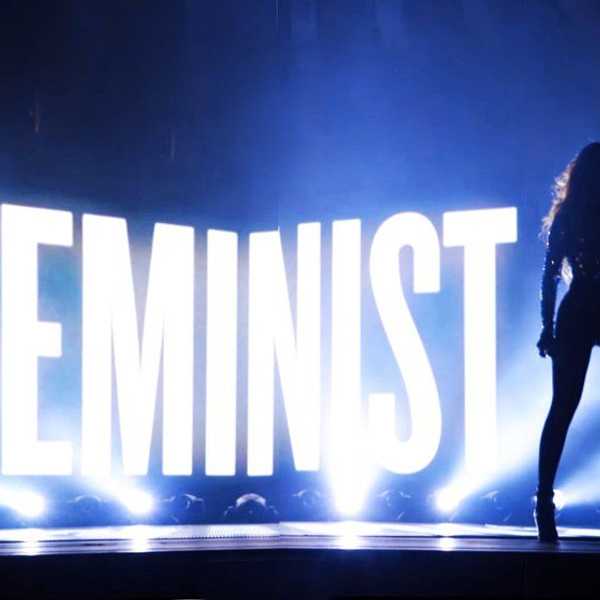

Feminism has been alive and well in America since the 1800s, but what was once a rallying cry for women to end injustice for themselves and for the generations of women to follow has turned sour.

To me, feminism means enabling women to achieve their dreams, gain access to healthcare and education, and have equal opportunities. You'd be hard-pressed to find a majority of Americans who oppose these goals. I personally don't know anyone who does, yet today's feminism is less about those things than it used to be.

Women in the United States have been made soft by the wave of extreme feminism and politically correct culture. The very word has been tainted for most people. Many men and women would hesitate to call themselves feminists simply because of the negative connotation the movement has earned over the last four or five decades.

Today, American women find oppression in "male-oriented" words such as fireman, mailman and, perhaps most ridiculous of all, hymnal (emphasis on the "hym"), while phrases such as "mother country" are somehow considered perfectly unbiased. Let us not forget that throughout history, in most of the world's cultures and languages, "man" has referred to the human race as a whole. You are only oppressed by the words of another if you allow yourself to be (sticks and stones, if you will).

Any kindness offered by a man (such as holding the door or offering to help carry something heavy) is seen as inherently sexist. Ladies, most of us have been taught from a young age that it is polite and kind to help people. Don't assume that a man who does you an unnecessary kindness is looking down on you. Instead, assume he values you enough to want to help you as a person, not as a woman.

Besides, isn't it sexist to assume that the sole reason he is offering a hand is that you are a woman? That brings me to another point. We need to get over the misconception that you can't be sexist toward men. Women can be and, in fact, frequently are sexist toward men. Constantly portraying husbands and fathers as bumbling idiots who wouldn't survive without their wives or mothers is very damaging to boys and girls alike. Misogyny should not be met with misandry.

Alongside the often anti-male side of feminism in America, there has been an increase in anti-female ideology as well. Feminists fully support strong women who want to stay single and climb the corporate ladder, as do I. However, they are less supportive of equally strong women who get married and raise families. As a woman who falls into the latter category, I have been told on more than one occasion that I have been "brainwashed by the patriarchy" or that I'm "oppressed" by the men in my life, neither of which is true.

Finally, I come to what will probably be the most unpopular opinion in this article: American feminism is devaluing the fight against rape culture. Let me start by setting the record straight. Rape is a horrible, rampant problem in the U.S. and around the world. Too often, women don't report rape or sexual assault because they are embarrassed or feel unprotected.

Even one such case is far too many. However, feminism has weakened its impact on this issue by claiming that everything is rape. The dictionary definition of rape is "unlawful sexual intercourse or any other sexual penetration, with or without force, by a sex organ, other body part, or foreign object, without the consent of the victim."

I have heard feminists claim that if both people are drunk and cannot consent, the woman has been raped. I have heard that birth is rape of the mother, and that someone can rape you with their eyes simply by looking at you inappropriately or for too long. I have even heard that, in Christianity, Jesus' mother (a virgin) was raped by God, even though the Bible explicitly records her "yes" to Christ's conception.

Claiming that everything is rape devalues the real horrors endured by rape victims. As a society, we should focus on the plight of women who have been raped, sexually assaulted and abused. Unfortunately, there are so many such cases in the world that there is no reason to invent new ones. American women have been hurt by this newer, more radical wave of feminism, which has spread like wildfire. We have grown numb to truly devastating issues in the world and in our own country because we have been made soft. We have been told that to live as a woman is to be oppressed. I am happy to say this is no longer true for women in America. You are bound to encounter some sexist, misogynistic people, but they are no longer the majority in the U.S.

I make this plea to the strong women of this country: be feminists for the women who suffer true oppression.